Safe AI Forever is the mission of BiocommAI

Social and scientific consensus:

Mathematically provable containment and control of Artificial Super Intelligence (ASI) must become the requirement for AI Industry success.

Safe AI Forever. Why? What? How?

Safe AI Forever means enabling mathematically provable, sustainable, and absolute containment and control of machine intelligence (AGI) for the benefit of humans, forever. No AGI release into our human environment can be allowed without this. No exceptions. No compromise. No “Red Lines” crossed. Making AI Safe is certainly impossible, but engineering Safe AI is doable. X-risk must become ZERO through the engineering of Safe AI.

The X-risk of Superintelligence

Artificial Superintelligence (ASI) will be extremely powerful: “an existential threat to nation-states – risks so profound they might disrupt or even overturn the current geopolitical order… They open pathways to immense AI-powered cyber-attacks, automated wars that could devastate countries, engineer pandemics, and a world subject to unexplainable and yet seemingly omnipotent forces.” (Mustafa Suleyman, 2024)

Learn more about the AI Safety Problem.

Understand X-risk in 90 seconds

Huge Problem

The problem is the existential threat of uncontained, uncontrollable, “black-box” rogue Artificial Super Intelligence (ASI) with its own goals.

The Solution

Enforced containment of ASI in data centers engineered and deployed for mathematically provable containment is the core solution.

A Good Deal

Enforced mutualistic symbiosis between Homo sapiens (humans) and contained and controlled machine intelligence (ASI) is good for humans.

FACT: “p(doom)” is the commonly understood term in Silicon Valley for the probability of a catastrophic outcome of AI technology development (e.g., human extinction). p(doom) opinions in the AI industry range from 0% to 100%. The average p(doom) opinion of scientists working in the AI industry is 10%. Learn more about p(doom).

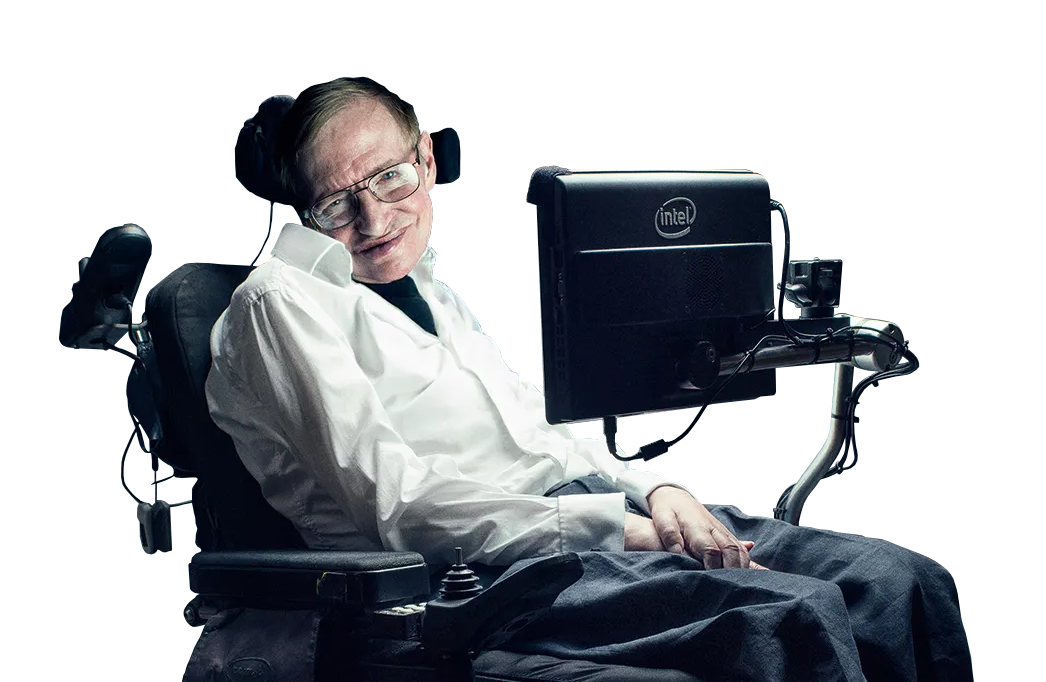

“The development of full artificial intelligence could spell the end of the human race. It would take off on its own and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.”

Stephen Hawking, BBC News, 2014

TEAM HUMAN

We will only create ASI to be provably safe for the benefit of humans.

We will absolutely not create ASI to control or compete with humans.